The mantra ‘data is the new oil’ may sound corny, but it captures an essential truth. Just like oil, development data passes through a complex value chain: from collection, processing, transport and analysis to storage and usage.

Collection

Processing

Transport

Analysis

Storage

Usage

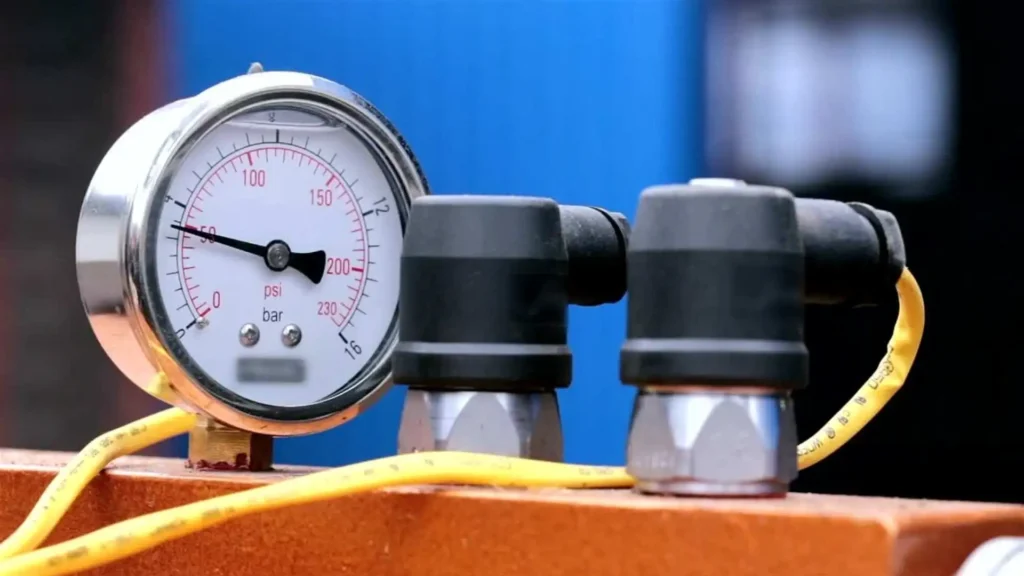

Ensure data quality and integrity: The quality of the raw data (accuracy, consistency, completeness, data rate and timeliness) determines the upper limit of data quality in the entire data value chain.
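Two of the quality criteria named above, completeness and timeliness, can be checked directly on the raw samples. The following is a minimal sketch; the function names and the convention that a missing sample is stored as `None` are assumptions made for illustration.

```python
from typing import List, Optional


def completeness(values: List[Optional[float]]) -> float:
    """Fraction of samples that are present (missing samples are None)."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def max_gap(timestamps: List[float]) -> float:
    """Largest interval between consecutive timestamps, in the same unit."""
    return max((b - a for a, b in zip(timestamps, timestamps[1:])), default=0.0)


# Hypothetical recording with one dropped sample and one long pause.
values = [1.0, None, 3.0, 4.0]
timestamps = [0.0, 1.0, 2.0, 5.0]
print(completeness(values), max_gap(timestamps))
```

A gap that is much larger than the nominal sample period is a simple indicator that the data rate was not maintained.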

Homogenise: Different sensor types and technologies require specific implementations and calibrations. Data acquisition therefore requires homogenisation of the recorded variables so that all data is consistent and comparable. Differing protocols and standards can make interoperability considerably more difficult.
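One common form of homogenisation is converting every recorded value into a single canonical unit per physical quantity. The sketch below assumes a small, hypothetical conversion table; the unit names and the choice of canonical units are illustrative only.

```python
from typing import Tuple

# Hypothetical table: source unit -> (canonical unit, factor, offset),
# applied as canonical = value * factor + offset.
TO_CANONICAL = {
    "degC": ("degC", 1.0, 0.0),
    "degF": ("degC", 5.0 / 9.0, -160.0 / 9.0),
    "K": ("degC", 1.0, -273.15),
    "Pa": ("Pa", 1.0, 0.0),
    "kPa": ("Pa", 1e3, 0.0),
    "bar": ("Pa", 1e5, 0.0),
}


def homogenise(value: float, unit: str) -> Tuple[float, str]:
    """Convert a value to the canonical unit of its physical quantity."""
    target, factor, offset = TO_CANONICAL[unit]
    return value * factor + offset, target


print(homogenise(212.0, "degF"))
print(homogenise(1.2, "bar"))
```

Keeping the table in one place means a new sensor type only adds a row instead of a new code path.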

Consider economic efficiency: Sensors and associated hardware can be expensive. Digital acquisition can also be costly, as it requires significant computing resources, especially when high accuracy and reliability are needed. The cost-effectiveness of data collection must therefore be weighed carefully to balance costs against benefits.

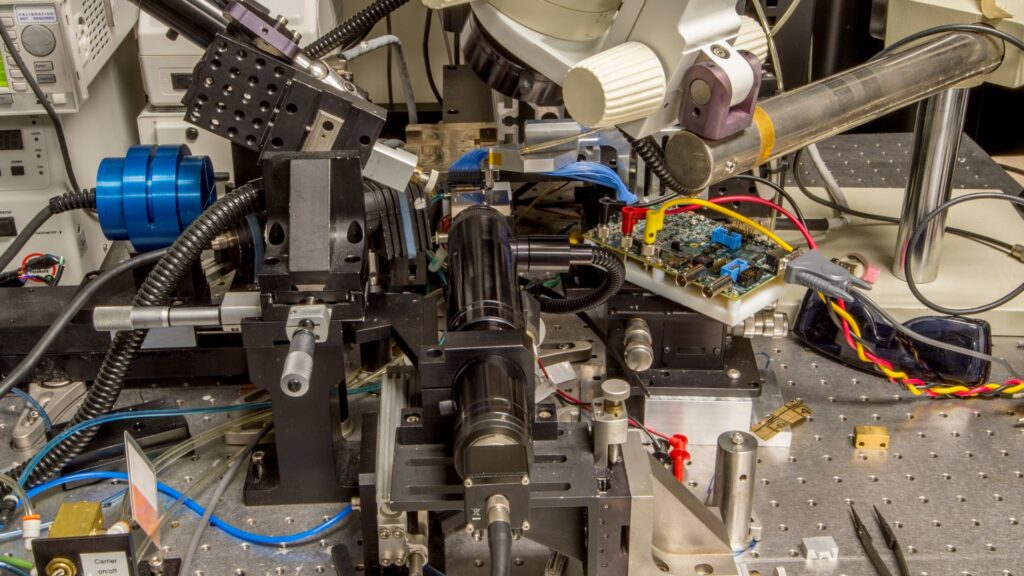

Contextualise: Conversion of raw data into usable measured values by adding contextual information such as time, unit and name. This contextualisation makes the data usable.
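As an illustration of contextualisation, the sketch below turns a raw integer count into a measured value that carries its name, unit and timestamp. The `Measurement` type, the linear scaling and the signal name are assumptions chosen for the example, not a prescribed format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Measurement:
    name: str
    value: float
    unit: str
    timestamp: datetime


def contextualise(raw_count: int, name: str, unit: str,
                  scale: float, offset: float,
                  timestamp: datetime) -> Measurement:
    """Apply a linear scaling to a raw count and attach its context."""
    return Measurement(name, raw_count * scale + offset, unit, timestamp)


# Hypothetical signal: 0.05 degC per count with a -40 degC offset.
sample = contextualise(
    2048, "coolant_temp", "degC", scale=0.05, offset=-40.0,
    timestamp=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(sample)
```

Without the name, unit and timestamp, the value 2048 could not be interpreted by any later stage of the chain.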

Pre-processing: Local preparation of the data for utilisation through filtering, transformation, encryption and compression. Selecting and configuring the signals to be measured and the parameters to be set is just as complex as adapting the data preparation processes to changing requirements and environments.
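Filtering and compression, two of the pre-processing steps named above, can be sketched with the Python standard library. The moving-average filter and the JSON-plus-zlib packing are stand-ins chosen for brevity; a real device would more likely use a binary format and a filter matched to the signal.

```python
import json
import zlib
from typing import List


def moving_average(samples: List[float], window: int) -> List[float]:
    """Smooth a signal with a simple moving average of the given width."""
    if window < 1 or window > len(samples):
        raise ValueError("window must be between 1 and len(samples)")
    return [
        sum(samples[i:i + window]) / window
        for i in range(len(samples) - window + 1)
    ]


def pack(samples: List[float]) -> bytes:
    """Serialise and compress samples for transfer or storage."""
    return zlib.compress(json.dumps(samples).encode("utf-8"))


def unpack(blob: bytes) -> List[float]:
    """Reverse of pack()."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


smoothed = moving_average([1.0, 2.0, 3.0, 4.0, 5.0], window=3)
restored = unpack(pack(smoothed))
print(smoothed, restored == smoothed)
```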

Commissioning: Collecting and merging data and handling input and output interfaces. Process variables, signals and parameters are often difficult to access. Different interfaces and heterogeneous systems require specific methods and solutions.

Transfer: Data must be transmitted via suitable hardware interfaces and communication elements. This hardware can be expensive and should therefore only be installed where it is really needed. Because the available technologies vary so widely, committing to one interface can limit flexibility later on.

Calculate: Data is transmitted via protocols and communication stacks. These must be computed and processed, which can exceed the capacity of the computing unit used, especially in the development phase when more data is required. Sufficient bandwidth must also be available to transfer all data at the required speed and to avoid bottlenecks that could slow down or interrupt data transfer.
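Whether a link has sufficient bandwidth can be estimated before any hardware is chosen. The sketch below is a rough calculation under stated assumptions: the 20 % protocol overhead and the 70 % maximum link utilisation are illustrative defaults, not values from any standard.

```python
def required_bandwidth_bps(signal_count: int, sample_rate_hz: float,
                           bytes_per_sample: int,
                           overhead: float = 0.2) -> float:
    """Estimated bit rate including an assumed protocol overhead."""
    payload_bits = signal_count * sample_rate_hz * bytes_per_sample * 8
    return payload_bits * (1.0 + overhead)


def fits(link_bps: float, demand_bps: float,
         max_utilisation: float = 0.7) -> bool:
    """True if the demand stays below the assumed usable share of the link."""
    return demand_bps <= link_bps * max_utilisation


# Hypothetical development setup: 200 signals at 1 kHz, 4 bytes each.
demand = required_bandwidth_bps(200, 1000.0, 4)
print(round(demand))                # about 7.68 Mbit/s
print(fits(500_000, demand))        # 500 kbit/s link
print(fits(100_000_000, demand))    # 100 Mbit/s link
```

In this example the demand is roughly 7.68 Mbit/s, which a 500 kbit/s link cannot carry while a 100 Mbit/s link can.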

Secure: The integrity and security of data during transport must be guaranteed. This includes protection against data loss, falsification and unauthorised access, which is often achieved through encryption and secure protocols. Latency and packet loss must be kept to a minimum, especially in real-time applications.
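Protection against falsification can be illustrated with a message authentication code. The sketch below uses HMAC-SHA256 from the Python standard library; the shared key and the payload are placeholders, and the example covers integrity only. Confidentiality would additionally require encryption, for example via a secure transport protocol.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Check the tag in constant time to detect modified payloads."""
    return hmac.compare_digest(sign(payload, key), tag)


# Placeholder key; in practice it would be provisioned securely.
key = b"example-shared-key"
frame = b"coolant_temp=62.4"
tag = sign(frame, key)

print(verify(frame, tag, key))
print(verify(b"coolant_temp=99.9", tag, key))
```

The receiver recomputes the tag and rejects any frame whose content was changed in transit.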

Visualise: The data must be presented so that it is as informative as possible for the question at hand. The visualisation must perform well enough to be generated quickly and efficiently, even with large volumes of data. Different data sources and communication protocols (‘languages’) must be integrated simultaneously and displayed together in a flexibly configurable manner.

Analyse: The processed data is analysed in detail to identify and measure deviations, anomalies, trends and correlations. Analysis configurations should be adaptable with little effort so that changing requirements can be met flexibly. Detecting events automatically makes it easier to assign data to them. If only relevant data is recorded automatically, the analysis effort is reduced.
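Recording only relevant data can be approximated with an event trigger that keeps a window of samples around each event. The sketch below uses a simple threshold as the event condition; the function name, the threshold and the window sizes are assumptions for illustration.

```python
from typing import List, Tuple


def record_around_events(samples: List[float], threshold: float,
                         pre: int, post: int) -> List[Tuple[int, int]]:
    """Return merged [start, end) index windows around threshold crossings."""
    windows: List[Tuple[int, int]] = []
    for i, value in enumerate(samples):
        if abs(value) > threshold:
            start = max(0, i - pre)
            end = min(len(samples), i + post + 1)
            if windows and start <= windows[-1][1]:
                # Overlapping or adjacent window: extend the previous one.
                windows[-1] = (windows[-1][0], max(windows[-1][1], end))
            else:
                windows.append((start, end))
    return windows


signal = [0, 0, 0, 9, 0, 0, 0, 0, 0, 0, 8, 9, 0, 0]
print(record_around_events(signal, threshold=5, pre=1, post=2))
# → [(2, 6), (9, 14)]
```

Only the samples inside the returned windows need to be stored and analysed, and each window is already associated with the event that caused it.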

Optimize: Optimization measures are implemented on the basis of the analysis results in order to improve the performance and efficiency of the systems. This can include the adjustment of control parameters, the introduction of new elements or the optimization of existing processes. If parameters can be adjusted in real time while the system is running, and the device does not have to be reprogrammed for every change, the effort required is significantly reduced.

Organize: Data must be stored in a structured and organized manner to ensure its accessibility and usability. This includes organizing the data in databases, using metadata and ensuring that the data is easy to find and retrieve. It is important to guarantee data integrity and ensure efficient indexing and fast retrieval times.
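Structured storage with metadata and an index can be sketched with SQLite from the Python standard library. The table layout, column names and sample values below are hypothetical; they only show how metadata (name, unit) and an index on signal and time support fast retrieval.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE signal (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE,
    unit TEXT NOT NULL
);
CREATE TABLE sample (
    signal_id INTEGER NOT NULL REFERENCES signal(id),
    ts        REAL NOT NULL,
    value     REAL NOT NULL
);
-- Index that serves time-range queries per signal.
CREATE INDEX idx_sample_signal_ts ON sample (signal_id, ts);
""")
conn.execute(
    "INSERT INTO signal (id, name, unit) VALUES (1, 'coolant_temp', 'degC')"
)
conn.executemany(
    "INSERT INTO sample (signal_id, ts, value) VALUES (1, ?, ?)",
    [(0.0, 61.9), (1.0, 62.4), (2.0, 63.0)],
)


def query_range(db: sqlite3.Connection, name: str,
                t_start: float, t_end: float) -> list:
    """Fetch (timestamp, value) rows of one signal within a time range."""
    return db.execute(
        "SELECT s.ts, s.value FROM sample s "
        "JOIN signal g ON g.id = s.signal_id "
        "WHERE g.name = ? AND s.ts BETWEEN ? AND ? ORDER BY s.ts",
        (name, t_start, t_end),
    ).fetchall()


print(query_range(conn, "coolant_temp", 0.5, 2.0))
```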

Archive: Data should be stored in such a way that it is accessible and secure for the long term. This includes storing historical data, providing access controls and ensuring data security. Ensuring data security and confidentiality, meeting compliance requirements and efficient data archiving and recovery are key challenges here.

Manage: Data must be organized in such a way that it can be reused for different applications and analyses. This includes the standardization of data formats and the provision of interfaces for data access. It is important to ensure data consistency across different applications and to handle data changes and updates efficiently.

Quality assurance: Data is used to monitor and ensure the quality of processes and products. This includes carrying out quality checks, identifying deviations and implementing measures to rectify errors. Continuous monitoring enables problems to be identified and rectified at an early stage, thereby improving quality assurance.

Control in operation: The data collected is used to control and regulate the operation of the systems. This includes the adjustment of control parameters, the implementation of control loops and real-time monitoring of the system status. By using data, operating processes can be optimized and efficiency increased.
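A control loop of the kind described here can be illustrated with a discrete PI controller acting on a simulated first-order system. This is a minimal sketch: the gains, the time step and the plant model are assumptions chosen so that the example converges, not tuning recommendations.

```python
class PIController:
    """Discrete PI controller; kp and ki can be changed while running."""

    def __init__(self, kp: float, ki: float, dt: float) -> None:
        self.kp = kp
        self.ki = ki
        self.dt = dt
        self.integral = 0.0

    def update(self, setpoint: float, measured: float) -> float:
        """Return the control command for the current measurement."""
        error = setpoint - measured
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


def simulate(controller: PIController, setpoint: float,
             steps: int, tau: float = 1.0) -> float:
    """Run the loop against a hypothetical first-order plant."""
    state = 0.0
    for _ in range(steps):
        command = controller.update(setpoint, state)
        state += (command - state) * controller.dt / tau
    return state


final = simulate(PIController(kp=2.0, ki=1.0, dt=0.1), setpoint=1.0, steps=300)
print(round(final, 3))
```

Because `kp` and `ki` are plain attributes, they can be adjusted between calls to `update` without rebuilding the controller.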

Monitoring and management during operation: Continuous monitoring and management of operational data is critical to assess the health and performance of systems. This includes in-life data management and predictive maintenance, where data is used to monitor the condition of the system and plan predictive maintenance actions. Efficient management of large amounts of data and ensuring data continuity and accuracy are central to this.

es:scope® is a registered trademark of es:saar GmbH. All Rights reserved.